We say that random signals can be Gaussian white noise, but to me, if a signal has a Gaussian distribution, it is necessarily white noise, because as soon as we know the distribution, $\mathbb{E}[f(t+\tau)f(t)]$ is automatically determined.

Am I wrong?

Yes, you are wrong in various ways.

First, by Gaussian noise is meant a random process (not a random function, as the OP calls it): that is, an infinite collection of random variables, one random variable for each time instant $t$, such that if we pick any $n \geq 1$ random variables $X_{t_1}, X_{t_2}, \ldots, X_{t_n}$ from the random process, then these random variables have an $n$-dimensional joint Gaussian distribution. Now, an $n$-dimensional Gaussian distribution is entirely determined by the $n$ means of the random variables and their $n\times n$ covariance matrix, but note very carefully that nothing at all has been asserted about the means or the covariance matrix of the $n$ Gaussian random variables. Specifically, nowhere is it written that all the means must be the same, or that $X_{t_i}$ and $X_{t_j}$ have the same variance, or that $\operatorname{cov}(X_{t_i},X_{t_j}) = \operatorname{cov}(X_{t_i+1},X_{t_j+1})$, etc. All that is meant by Gaussian noise is that the $n$ random variables have a jointly Gaussian distribution (which implies, among other things, that $X_t$ and $X_s$ individually are Gaussian random variables as well). Thus being Gaussian says nothing by itself about $E[X_{t+\tau}X_t]$: that autocorrelation is extra information needed to specify the process, and it need not be the autocorrelation of white noise.
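A minimal sketch of this first point, using a construction chosen here purely for illustration (it is not from the question): let $Z[n]$ be i.i.d. $N(0,\sigma^2)$ and set $X[n] = Z[n] + Z[n-1]$. Any finite collection of the $X[n]$ is a fixed linear transformation of jointly Gaussian $Z$'s, hence jointly Gaussian, yet adjacent samples are correlated, so the process is Gaussian but not white. The helper names `ma1_matrix` and `covariance` are hypothetical, and plain lists are used so no third-party packages are needed.

```python
# Illustrative construction (not from the question): X[n] = Z[n] + Z[n-1],
# with Z[n] i.i.d. N(0, sigma^2). The vector (X[1], ..., X[n]) equals A Z for a
# fixed matrix A, so it is jointly Gaussian with covariance sigma^2 * A A^T.


def ma1_matrix(n):
    """n x (n+1) matrix A whose row i has ones in columns i and i+1."""
    return [[1 if j in (i, i + 1) else 0 for j in range(n + 1)] for i in range(n)]


def covariance(a, sigma2=1.0):
    """Exact covariance sigma^2 * A A^T of X = A Z when Cov(Z) = sigma^2 I."""
    cols = len(a[0])
    return [
        [sigma2 * sum(a[i][k] * a[j][k] for k in range(cols)) for j in range(len(a))]
        for i in range(len(a))
    ]


cov = covariance(ma1_matrix(4), sigma2=1.0)
for row in cov:
    print(row)
```

With $\sigma^2 = 1$ the printed matrix has $2$ on the diagonal, $1$ on the first off-diagonals and $0$ elsewhere; those nonzero off-diagonal entries are exactly what whiteness would forbid.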

Second, white noise is a mythical process that is unobservable in all its glory in nature (probably just as well, since it is infinitely powerful and would lead to an immediate solution to the energy crisis). We poor mortals can only observe white noise through some kind of device that necessarily limits what we can observe (kind of like watching a solar eclipse through special glasses), and thus what we observe is a pale imitation of the real thing. Well, it has been observed that if an observation device is modeled as a linear filter with transfer function $H(f)$, then (with an open circuit at the filter input) the filter output is a random process with power spectral density $\sigma^2|H(f)|^2$. This is consistent with assuming that the input to the filter is a white noise process with autocorrelation function $R(\tau) = \sigma^2\delta(\tau)$, where $\delta(\cdot)$ is a Dirac delta or impulse, and power spectral density $S(f) = \sigma^2$ for $-\infty < f < \infty$: we simply plug in $\sigma^2$ for the input power spectral density in the power spectral density equation

$$S_{\text{output}}(f) = |H(f)|^2 S_{\text{input}}(f).$$
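As a discrete-time analog of this formula (an assumption made here so that everything stays finite and computable), pass white noise of variance $\sigma^2$ through a hypothetical two-tap FIR filter $h = [1, 1]$. The output autocorrelation is $R[k] = \sigma^2\sum_n h[n]h[n+k]$, and its discrete-time Fourier transform should agree with $\sigma^2|H(f)|^2$. The function names below are illustrative only.

```python
import cmath
import math


def freq_response(h, f):
    """DTFT H(f) = sum_n h[n] e^{-j 2 pi f n} of a finite impulse response h."""
    return sum(hn * cmath.exp(-2j * math.pi * f * n) for n, hn in enumerate(h))


def output_autocorr(h, sigma2):
    """R_out[k] = sigma^2 * sum_n h[n] h[n+|k|] for a white input of variance sigma^2."""
    taps = len(h)
    return {
        k: sigma2 * sum(h[n] * h[n + abs(k)] for n in range(taps - abs(k)))
        for k in range(-(taps - 1), taps)
    }


def psd_from_autocorr(r, f):
    """S(f) = sum_k R[k] e^{-j 2 pi f k}; real-valued because R[k] = R[-k]."""
    return sum(rk * cmath.exp(-2j * math.pi * f * k) for k, rk in r.items()).real


h = [1.0, 1.0]  # hypothetical observation filter
sigma2 = 0.5    # input white-noise level
r = output_autocorr(h, sigma2)
for f in (0.0, 0.1, 0.25, 0.5):
    via_formula = sigma2 * abs(freq_response(h, f)) ** 2  # |H(f)|^2 * S_in(f)
    via_autocorr = psd_from_autocorr(r, f)
    print(f, round(via_formula, 6), round(via_autocorr, 6))
```

Both routes give $2\sigma^2(1+\cos 2\pi f)$, which is not flat: the observed (filtered) process is no longer white even though the input was.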

Never mind that many mathematicians will cringe at the cavalier treatment where we are ignoring that the above formula implicitly assumes that the input process is a finite-power process (which white noise is definitely not); we are engineers and we don't care. We are used to treating impulses like ordinary functions.

Finally, turning to discrete-time random processes, remember that one cannot sample the mythical beast called white noise (it does not exist in nature). The sampler is necessarily a device that observes the random process for a very short but nonzero time $\varepsilon$, and thus the sample $X[n]$ is actually something proportional to $\int_{nT-\varepsilon/2}^{nT+\varepsilon/2}X_t \,\mathrm dt$, which has variance $\sigma^2\varepsilon$ if $\{X_t\}$ is a white noise process. Moreover, samples taken over non-overlapping windows are uncorrelated (and, for white Gaussian noise, independent). So,
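The variance claim, and the fact that non-overlapping windows give uncorrelated samples, follow from the same formal treatment of the impulse. Write $W_n = [nT-\varepsilon/2,\ nT+\varepsilon/2]$ for the $n$-th observation window and $I_n = \int_{W_n} X_t\,\mathrm dt$ (notation introduced here), and assume zero-mean white noise with $E[X_tX_s] = \sigma^2\delta(t-s)$ and $\varepsilon < T$:

$$
\begin{aligned}
\operatorname{var}(I_n) &= \int_{W_n}\int_{W_n} E[X_t X_s]\,\mathrm dt\,\mathrm ds
= \int_{W_n}\int_{W_n} \sigma^2\delta(t-s)\,\mathrm dt\,\mathrm ds
= \int_{W_n} \sigma^2\,\mathrm ds = \sigma^2\varepsilon,\\
\operatorname{cov}(I_m, I_n) &= \int_{W_m}\int_{W_n} \sigma^2\delta(t-s)\,\mathrm dt\,\mathrm ds = 0 \qquad (m \neq n),
\end{aligned}
$$

since $t - s \neq 0$ whenever $t \in W_n$ and $s \in W_m$ lie in disjoint windows.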

A discrete-time white noise process is a collection of zero-mean independent identically distributed random variables $X[n]$.

A discrete-time white Gaussian noise process is a collection of zero-mean independent identically distributed Gaussian random variables $X[n]$.
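A minimal sketch of the discrete-time white Gaussian noise definition using only Python's standard `random` module: draw i.i.d. $N(0,\sigma^2)$ samples and estimate $E[X[n]X[n+k]]$. The estimate should be near $\sigma^2$ at lag $0$ and near $0$ at other lags. Keep in mind that such an estimate only checks uncorrelatedness, not independence. Function names, sample size and seed are arbitrary choices.

```python
import random


def white_gaussian_noise(n, sigma, seed=None):
    """n i.i.d. zero-mean Gaussian samples with standard deviation sigma."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, sigma) for _ in range(n)]


def sample_autocorr(x, lag):
    """Biased estimate (1/N) * sum_n x[n] x[n+lag] of E[X[n] X[n+lag]] (zero mean assumed)."""
    n = len(x)
    return sum(x[i] * x[i + lag] for i in range(n - lag)) / n


x = white_gaussian_noise(100_000, sigma=2.0, seed=1)
for lag in range(4):
    print(lag, round(sample_autocorr(x, lag), 3))
```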

Yes, many DSP texts (as well as Wikipedia's definition of a discrete-time white noise process) and many people with much higher reputation than me on dsp.SE say that uncorrelatedness suffices for defining a white noise process. In the case of white Gaussian noise it does, because Gaussianity brings in the jointly Gaussian property: a discrete-time Gaussian random process is defined as a sequence of random variables $\{X[n]\colon n \in \mathbb Z\}$ such that any set of $M\geq 1$ random variables $X[n_1], X[n_2], \ldots, X[n_M]$ enjoys a jointly Gaussian distribution, and so for white Gaussian noise, uncorrelatedness implies independence. However, for arbitrary white noise, it is best to insist on independence and not just on zero correlation.

For the edification of all these important people who insist that uncorrelatedness is adequate, I present a discrete-time process in which every random variable is a Gaussian random variable and any two random variables are uncorrelated but not necessarily independent, and in which not all sets of variables enjoy a jointly Gaussian distribution. In short, the process is not a white Gaussian noise process as per the standard definition.

And why should all this matter in the least? Well, in typical applications we apply various mathematical operations to processes, and if $X[0]$ and $X[1]$ are uncorrelated Gaussian random variables and we cannot rely on $X[0]+X[1]$ also being a Gaussian random variable, things have come to a pretty pass, and it is not a world I want to live in.
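Why uncorrelatedness suffices once joint Gaussianity is given: if $X[n_1],\ldots,X[n_M]$ are jointly Gaussian with zero means and are uncorrelated, their covariance matrix is $C = \operatorname{diag}(\sigma_1^2,\ldots,\sigma_M^2)$ (assuming each $\sigma_i^2 > 0$), so $\det C = \prod_i \sigma_i^2$ and $\mathbf x^{\mathsf T} C^{-1}\mathbf x = \sum_i x_i^2/\sigma_i^2$. The joint density then factors into the product of the marginals, which is independence:

$$
f_{X[n_1],\ldots,X[n_M]}(x_1,\ldots,x_M)
= \frac{1}{(2\pi)^{M/2}\sqrt{\det C}}\exp\!\left(-\tfrac12\,\mathbf x^{\mathsf T}C^{-1}\mathbf x\right)
= \prod_{i=1}^{M}\frac{1}{\sqrt{2\pi}\,\sigma_i}\exp\!\left(-\frac{x_i^2}{2\sigma_i^2}\right).
$$

Without joint Gaussianity there is no such joint density formula to invoke, and Gaussian marginals alone do not force the factorization.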

Example: Let $X$ be a $N(0,1)$ random variable and $B$ a discrete random variable, independent of $X$, that takes on values $+1$ and $-1$ with equal probability $\frac 12$. Set $Y = BX$ and note that $E[Y]=E[BX]=E[B]E[X]=0$. Furthermore, $E[XY] = E[X^2B] = E[X^2]E[B] = 0$, and so $X$ and $Y$ are uncorrelated random variables. But what is the distribution of $Y$? Well,

\begin{align}
P(Y \leq a) &= P(Y\leq a \mid B=+1)P(B=+1) + P(Y\leq a \mid B=-1)P(B=-1)\\
&= \frac 12 P(BX\leq a \mid B=+1) + \frac 12 P(BX\leq a \mid B=-1)\\
&= \frac 12 P(X\leq a) + \frac 12 P(X\geq -a)\\
&= \frac 12 \Phi(a) + \frac 12 \Phi(a)\\
&= \Phi(a),
\end{align}

that is, $Y$ is also a $N(0,1)$ random variable!! (Here $\Phi$ is the standard Gaussian CDF, and $P(X\geq -a) = \Phi(a)$ by the symmetry of the Gaussian density.) But $X$ and $Y$ are not jointly Gaussian random variables. Note that, conditioned on $X = \alpha$, $Y$ is a discrete random variable that takes on the values $\pm\alpha$ with equal probability, whereas with joint Gaussianity, $Y$ would have been a Gaussian random variable.
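A quick Monte Carlo sanity check of this example (a sketch using only the standard library; the seed and sample size are arbitrary): the sample estimate of $E[XY]$ is near $0$ and the empirical CDF of $Y$ tracks $\Phi$, yet $|Y| = |X|$ on every draw, and $X + Y = X(1+B)$ equals $0$ exactly whenever $B = -1$, which happens about half the time. A Gaussian random variable with positive variance cannot put probability $\frac12$ on a single point, so $X + Y$ is not Gaussian, and hence $X$ and $Y$ cannot be jointly Gaussian.

```python
import random
from statistics import NormalDist

rng = random.Random(2024)  # fixed seed so the run is reproducible
N = 200_000
xs = [rng.gauss(0.0, 1.0) for _ in range(N)]
bs = [rng.choice((-1, 1)) for _ in range(N)]
ys = [b * x for b, x in zip(bs, xs)]

# Uncorrelated: the sample estimate of E[XY] should be near 0.
exy = sum(x * y for x, y in zip(xs, ys)) / N

# Y is N(0,1): the empirical P(Y <= a) should track Phi(a) at a few test points.
phi = NormalDist().cdf
cdf_gap = max(abs(sum(y <= a for y in ys) / N - phi(a)) for a in (-1.5, -0.5, 0.0, 0.7, 2.0))

# Not jointly Gaussian: |Y| = |X| always, and X + Y = X(1 + B) is exactly 0
# whenever B = -1, i.e. with probability 1/2.
same_magnitude = all(abs(y) == abs(x) for x, y in zip(xs, ys))
frac_sum_zero = sum(x + y == 0.0 for x, y in zip(xs, ys)) / N

print(round(exy, 3), round(cdf_gap, 3), same_magnitude, round(frac_sum_zero, 3))
```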

With this as background, let $\{X[2n]\colon n \in \mathbb Z\}$ be a set of independent identically distributed zero-mean Gaussian random variables, that is, a standard white Gaussian noise process on the even integers. Let $\{B[n]\colon n \in \mathbb Z\}$ be an independent process where the $B[n]$'s are independent discrete random variables that take on values $+1$ and $-1$ with equal probability $\frac 12$. Set

$X[2n+1] = X[2n]B[n]$ and note that each pair $(X[2n],X[2n+1])$ is a pair of uncorrelated zero-mean Gaussian random variables that are not jointly Gaussian. Now let's look at the random process

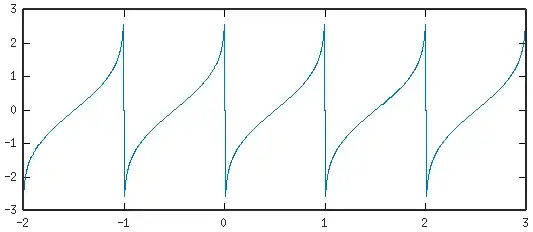

$\{X[m]\colon m \in \mathbb Z\}$ in which all the random variables are zero-mean Gaussian with the same variance. Any pair of random variables is uncorrelated: $X[2n]$ and $X[2n+1]$ by construction and all the more distant pairs because of independence. But, not all pairs of random variables have a jointly Gaussian distribution and so this is not a white Gaussian noise process in the usual sense of the term; ymmv.
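A sketch of this construction (the function names, seed and sample size are hypothetical choices): the estimated second moments agree on even and odd indices, the estimated correlations within a block and across adjacent blocks are both near $0$, yet $X[2n] + X[2n+1]$ is exactly $0$ in about half the blocks, so a sum of two of these "white" Gaussian samples need not be Gaussian.

```python
import random


def build_process(num_pairs, sigma=1.0, seed=None):
    """Return [X[0], ..., X[2*num_pairs - 1]] where the X[2n] are i.i.d. N(0, sigma^2),
    the B[n] are i.i.d. uniform on {+1, -1} and independent of the X[2n],
    and X[2n+1] = X[2n] * B[n]."""
    rng = random.Random(seed)
    x = []
    for _ in range(num_pairs):
        even = rng.gauss(0.0, sigma)
        b = rng.choice((1, -1))
        x.extend((even, even * b))
    return x


def mean_product(x, start, lag, step=2):
    """Average of x[m] * x[m + lag] over m = start, start + step, ...
    (an estimate of E[X[m] X[m + lag]] for zero-mean samples)."""
    terms = [x[m] * x[m + lag] for m in range(start, len(x) - lag, step)]
    return sum(terms) / len(terms)


x = build_process(100_000, seed=7)
var_even = mean_product(x, start=0, lag=0)  # E[X[2n]^2]
var_odd = mean_product(x, start=1, lag=0)   # E[X[2n+1]^2]
within = mean_product(x, start=0, lag=1)    # E[X[2n] X[2n+1]], same block
across = mean_product(x, start=1, lag=1)    # E[X[2n+1] X[2n+2]], adjacent blocks
# Not jointly Gaussian: X[2n] + X[2n+1] = X[2n](1 + B[n]) is exactly 0 whenever B[n] = -1.
frac_zero = sum(x[m] + x[m + 1] == 0.0 for m in range(0, len(x), 2)) / (len(x) // 2)

print(round(var_even, 3), round(var_odd, 3), round(within, 3), round(across, 3), round(frac_zero, 3))
```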