We have timeseries data from several sensors in a table and I am interested in getting N equally-spaced samples from the full range, as the whole data would be too much to display in a web-app.

I am currently using the following query for N = 500:

SELECT sensor_id, sensor_data_id, val1, val2, datetime

FROM (

SELECT sensor_id, sensor_data_id, val1, val2, datetime

, ROW_NUMBER() OVER (ORDER BY datetime)

FROM sensordata

WHERE sensor_id = 22

) x

WHERE mod(ROW_NUMBER, (SELECT COUNT(1) / 500 FROM sensordata WHERE sensor_id = 22)) = 0;

The table contains val1 and val2 for several timestamps per sensor_id (roughly 2M rows for sensor_id = 22). The table has primary key (sensor_data_id) and the following other indices - (sensor_id) and a composite index (sensor_id, date_time).

The above query roughly takes 36s and the result size for sensor_id = 22 is around 278 MB, found using (SELECT pg_size_pretty( sum(pg_column_size(sensordata))) FROM sensordata WHERE sensor_id = 22;). While the above query does not consider a time-range, I want to eventually add another condition in the WHERE clause to downsample the data for a selected datetime range for a particular sensor_id.

I have tried EXPLAIN ANALYZE a couple of times, and the only hint that seemed to help a little was increasing the work_mem which is 300 MB now. The database does not need to serve a lot of connections simultaneously, so this high a number is not an issue currently.

Are there more efficient ways to achieve the above? The table sensordata is likely to grow in time. Currently around 7 GBs for 121 distinct sensor_id.

About PG: "PostgreSQL 12.11 on x86_64-pc-linux-gnu, compiled by gcc (GCC) 7.3.1 20180712 (Red Hat 7.3.1-12), 64-bit"

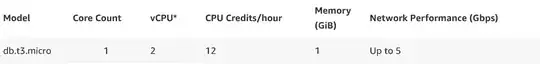

Running on: AWS RDS db.t3.micro which has the following specs:

EXPLAIN (ANALYZE, BUFFERS)output. – Laurenz Albe Dec 14 '22 at 04:31