Here are several perfmon counters you can use as a starter.

Memory Grants Pending - This is a counter that tells you if there any queries waiting for a memory grant (memory allocation). This should actually be 0. If it is consistently more than that, you have an issue.

Page Life Expectancy - This is the estimated amount of time in seconds that a page stays in memory. The higher the better, but there is a formula to calculate what the minimum value for your serer should actually be. It used to be 300 seconds minimum, but this is an old calculation and should nowadays be 100 seconds per GB. I got this from Richard Douglas during a SQL Saturday session, so credits to him. He works for SentryOne. Less than that value tells you there is too little memory assigned. Also use this counter in conjunction with Checkpoint pages/sec. Be aware that every NUMA node has it's own PLE value (if you have multiple NUMA nodes on your SQL Server). SQL Server starts deviding resources into (soft) NUMA nodes above 8 cores assigned.

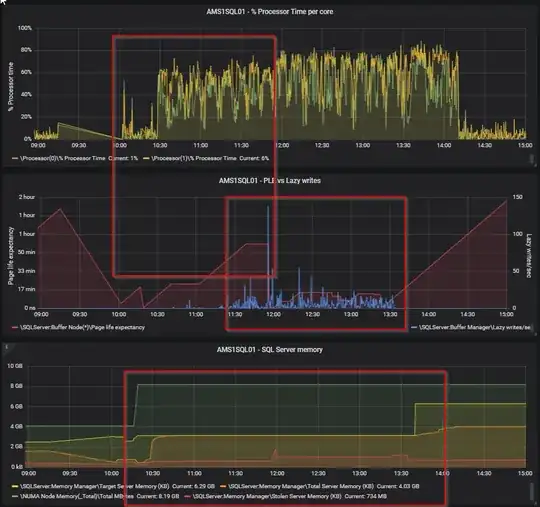

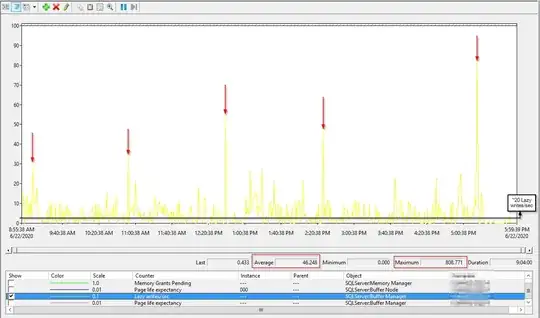

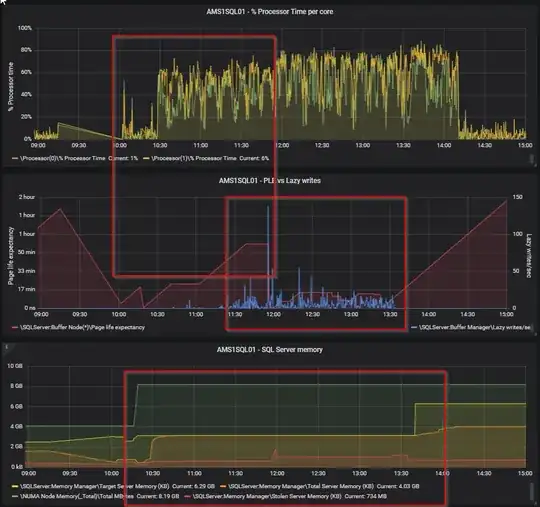

Lazy writes/ sec - The lazy writer process clears out old pages from the cache when SQL Server experiencs memory pressure. Constantly above a value of 20 is a problem (got this from Richard Douglas as well). However, use it in conjunction with Page Life Expectancy. If you see a high PLE together with a spike in lazy writes/sec, then something is causing SQL to remove pages from it's cache and insert new pages. See the screenshot below for an example from my home lab.

Iam sure that some experts here know a little more about the memory clerks, still on my list to dive in deeper, so maybe someone has some additional info for you (in which I am very much interested as well :-) ).

Edit: you can also use sys.dm_os_performance_counters to get them on the fly with an query if you like.

Edit 24 June 2020:

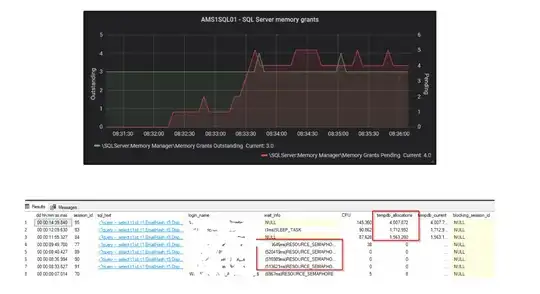

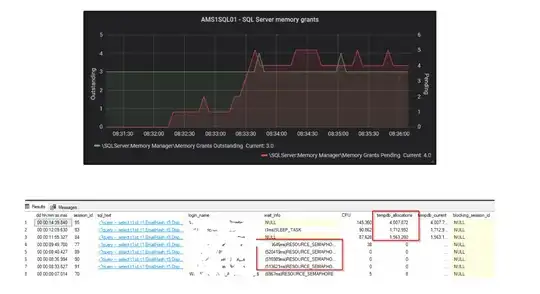

@J.D. regarding your comment of June 23; i digged in a little deeper into memory pressure as well, because of the comments of @Dominique Boucher and this article: https://www.brentozar.com/archive/2020/06/page-life-expectancy-doesnt-mean-jack-and-you-should-stop-looking-at-it/. I was laughing when I got this in my mailbox; maybe he saw this post. :-) The article is telling us to stop looking at it. Well, although Brent is surely a lot more experienced then me, I don't think I can totally agree with his statement to never look at it. I get his point within the context of his sp_BlitzFirst, a single query using max 25% of the buffer cache, it is a lagging indicator, etc. but for trend analysis and history I would still look at PLE vs Lazy Writer. If I want to determine if a server has memory pressure over time, this is what i would use in combination with the waiting memory grants. Also, monitoring tools from RedGate and Quest still use this. Now @Dominique Boucher says to look at the RESOURCE_SEMAPHORE waits, which I agree on, but this will most likely be in line with the number of pending memory grants (which you can easily register with perfmon). If you have a constant queue of memory grants (it works with a FIFO queue), then you do have memory pressure.

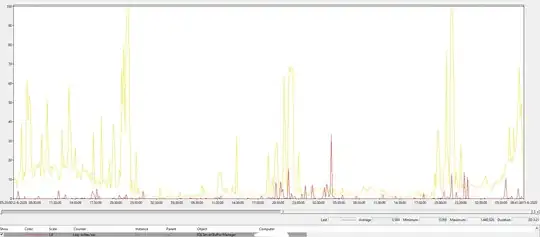

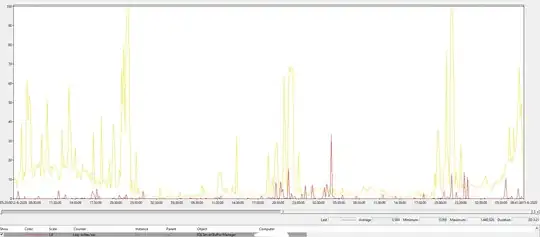

As a reference, this is Lazy Writer for a certain system in this universe (32 GB mem, 1 TB database, although the type of workload is also very important). Yellow is batch requests p/s, 10 indicates a 1000, so you can see it is definitely not idle.

here is also a snapshot from my home lab regarding memory grants vs RESOUCE_SEMPAPHORE waits (and I also see I highlighted the tempdb writes, that was what david was talking about, too little mem, so spill to tempdb):

Now, looking at your perfmon counters, I think that you could definitely have a memory issue. I mean; something is constantly forcing SQL Server to remove pages from the buffer cache. If this would be a single time, ok, but it seems it is constantly busy doing that... However, I would like to see them compared to PLE. This can be a clear indication there is memory pressure ( over time I still think so). Secondly, you also want to look at pending memory grants. Now, I didn't say this before, but looking back I do think you want to look at the wait stats as Brent and Dominque are saying. However, that is a little more difficult over time. Wait stats are gathered cummulative, so you need to clear them first (which I don't prefer) and then look at them if the RESOURCE_SEMAPHORE are adding up.

To clear the wait stats: https://learn.microsoft.com/en-us/sql/t-sql/database-console-commands/dbcc-sqlperf-transact-sql?view=sql-server-ver15

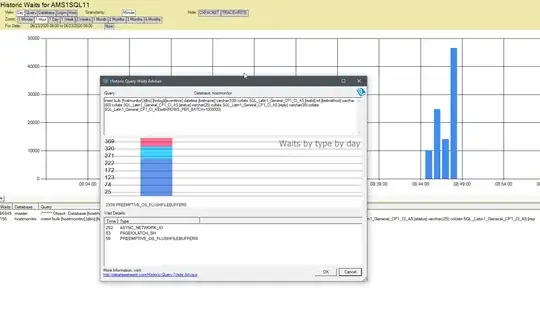

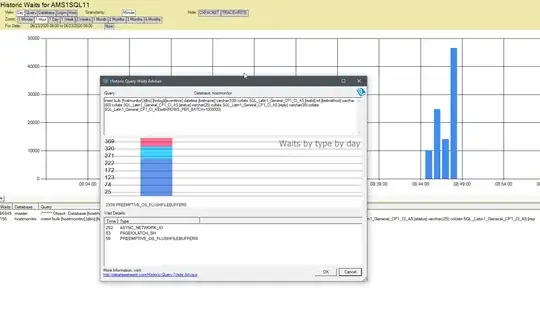

You can also use sp_BlitzFirst to monitor it, but that is only a snapshot from the point of time your are running it. sp_BlitzFirst (or sp_Blitz i cannot recall) has an option to record this periodically in a table, so you could look at that as well. Or just querying dm_os_wait_stats yourself in some other way also works. In general, my personal preference is to collect data over time to analyze this. I do this using Database Health Monitor from Steve Stedman: databasehealth.com. Screenshot from my home lab:

This way you can monitor wait stats a lot better, although it will cost you a tiny bit of resources.

If you have SQL Server 2017 or above (we don't have that yet in our env.), then you can also use Query Store. As of SQL Server 2017 Query Store also records wait stats (it is a configurable option). Be carefull though, I have read stories of Query Store bringing very busy servers to their knees (you can monitor that with wait stats :-P ). You should always test before implementing functionality in prod of course. We do use it, and works great, but we have 2016, so we do miss the wait stats option :-(.

By the way; my tactic would be to gather information (perfomon, wait stats if possible), if you think there is memory pressure upgrade the RAM (if a VM that would be quite easy), gather performance indicaters afterwards and check if they have improved. Bit of a no brainer, but the latter is often forgotten or not done very well.

Usually, when you have not enough memory, you would get waits like "RESOURCE_SEMAPHORE". It does automatically means that you need more RAM. Sometime, it could be fix by improving the queries or adding the right indexes.

How big is your database and how much RAM is assign to the SQL (maximum_memory) ?

– Dominique Boucher Jun 12 '20 at 18:30