I have a postgres database that has the following structure:

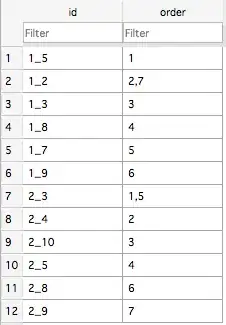

where the fields in column1 and column2 can take any value and where the order column indicates the order in which the original data was inserted. This table contains a lot of rows (with lots of duplicates) and I'm wondering whether there is a smarter way to store this data. Suppose that I were to create a unique id for each value per column and store the data like this:

Suppose that n is the number of rows in the first table and u the number of unique rows in the first table. If u is relatively large then the order column in the second table will have relatively short values in the order column fields, and if u is relatively large the fields in the order column in the second table will be quite wide. I would like to be able to compare the best storage method in both cases.

Question: I want to be able to compare these two tables to determine which storage method is better; is there a formula/method to find out how columns vs rows take up memory?