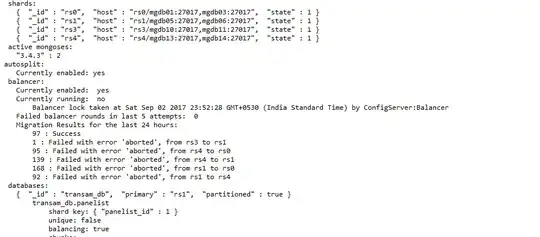

A chunk migration was aborted in MongoDB.

In the logs I found the following:

2017-09-14T01:35:21.533+0900 W SHARDING [conn38309] Chunk move failed :: caused by :: ChunkRangeCleanupPending: can't accept new chunks because there are still 4 deletes from previous migration

2017-09-14T01:35:11.439+0900 I COMMAND [conn38309] command admin.$cmd command: moveChunk { moveChunk: "transam_db.panelist", shardVersion: [ Timestamp 762000|1, ObjectId('590b4564c5ac1ee3b6e9050d') ], epoch: ObjectId('590b4564c5ac1ee3b6e9050d'), configdb: "configReplSet/mgdb07:27019,mgdb08:27019,mgdb09:27019", fromShard: "rs1", toShard: "rs4", min: { panelist_id: 407157 }, max: { panelist_id: 416836 }, chunkVersion: [ Timestamp 762000|1, ObjectId('590b4564c5ac1ee3b6e9050d') ], maxChunkSizeBytes: 67108864, waitForDelete: false, takeDistLock: false } exception: can't accept new chunks because there are still 4 deletes from previous migration code:200 numYields:75 reslen:278 locks:{ Global: { acquireCount: { r: 161, w: 3 } }, Database: { acquireCount: { r: 79, w: 3 } }, Collection: { acquireCount: { r: 79, W: 3 } } } protocol:op_command 2622ms
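To see which shard pair and chunk range are involved, the relevant fields can be pulled out of the COMMAND log line. The helper below is a minimal sketch. `summarize_move_chunk` and the trimmed `LOG_LINE` excerpt are hypothetical illustrations, not part of MongoDB's tooling, and it assumes the log keeps the field layout shown above. According to the error message, the recipient shard (`toShard`, here `rs4`) is the one that refuses the chunk while it still has deletes pending from a previous migration.

```python
import re

# Trimmed excerpt of the moveChunk COMMAND log line above (hypothetical constant).
LOG_LINE = (
    'command admin.$cmd command: moveChunk { moveChunk: "transam_db.panelist", '
    'fromShard: "rs1", toShard: "rs4", min: { panelist_id: 407157 }, '
    'max: { panelist_id: 416836 }, maxChunkSizeBytes: 67108864 } '
    "exception: can't accept new chunks because there are still 4 deletes "
    "from previous migration code:200 numYields:75"
)


def summarize_move_chunk(line: str) -> dict:
    """Extract namespace, shard pair, chunk bounds, pending deletes and error code.

    Assumes a single-field numeric shard key, as in the log above.
    Missing fields are returned as None.
    """
    patterns = {
        "namespace": r'moveChunk: "([^"]+)"',
        "from_shard": r'fromShard: "([^"]+)"',
        "to_shard": r'toShard: "([^"]+)"',
        "min": r"min: \{ \w+: (\d+) \}",
        "max": r"max: \{ \w+: (\d+) \}",  # does not match maxChunkSizeBytes
        "pending_deletes": r"there are still (\d+) deletes",
        "code": r"code:(\d+)",
    }
    result = {}
    for key, pattern in patterns.items():
        match = re.search(pattern, line)
        result[key] = match.group(1) if match else None
    return result


if __name__ == "__main__":
    for field, value in summarize_move_chunk(LOG_LINE).items():
        print(f"{field}: {value}")
```

Error code 200 in the log corresponds to the `ChunkRangeCleanupPending` name shown in the SHARDING warning line.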

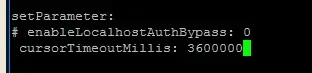

Could you suggest a solution? I started getting this error after we changed some parameters (setParameter) on the replica sets, the config servers, and mongos.