Since varchar anyways allocate space dynamically, my question is whether using varchar(255) be more efficient or save more space compared to using varchar(5000). If yes, why?

- 31,278

- 13

- 101

- 147

- 503

- 1

- 4

- 7

-

Do you need a 5000 character wide column? If so WHY? Would a varchar (MAX) column work better for you here? – Richard L. Dawson Jan 24 '17 at 19:10

1 Answers

Yes, varchar(5000) can be worse than varchar(255) if all values will fit into the latter. The reason is that SQL Server will estimate data size and, in turn, memory grants based on the declared (not actual) size of the columns in a table. When you have varchar(5000), it will assume that every value is 2,500 characters long, and reserve memory based on that.

Here's a demo from my recent GroupBy presentation on bad habits that makes it easy to prove for yourself (requires SQL Server 2016 for some of the sys.dm_exec_query_stats output columns, but should still be provable with SET STATISTICS TIME ON or other tools on earlier versions); it shows larger memory and longer runtimes for the same query against the same data - the only difference is the declared size of the columns:

-- create three tables with different column sizes

CREATE TABLE dbo.t1(a nvarchar(32), b nvarchar(32), c nvarchar(32), d nvarchar(32));

CREATE TABLE dbo.t2(a nvarchar(4000), b nvarchar(4000), c nvarchar(4000), d nvarchar(4000));

CREATE TABLE dbo.t3(a nvarchar(max), b nvarchar(max), c nvarchar(max), d nvarchar(max));

GO -- that's important

-- Method of sample data pop : irrelevant and unimportant.

INSERT dbo.t1(a,b,c,d)

SELECT TOP (5000) LEFT(name,1), RIGHT(name,1), ABS(column_id/10), ABS(column_id%10)

FROM sys.all_columns ORDER BY object_id;

GO 100

INSERT dbo.t2(a,b,c,d) SELECT a,b,c,d FROM dbo.t1;

INSERT dbo.t3(a,b,c,d) SELECT a,b,c,d FROM dbo.t1;

GO

-- no "primed the cache in advance" tricks

DBCC FREEPROCCACHE WITH NO_INFOMSGS;

DBCC DROPCLEANBUFFERS WITH NO_INFOMSGS;

GO

-- Redundancy in query doesn't matter! Just has to create need for sorts etc.

GO

SELECT DISTINCT a,b,c,d, DENSE_RANK() OVER (PARTITION BY b,c ORDER BY d DESC)

FROM dbo.t1 GROUP BY a,b,c,d ORDER BY c,a DESC;

GO

SELECT DISTINCT a,b,c,d, DENSE_RANK() OVER (PARTITION BY b,c ORDER BY d DESC)

FROM dbo.t2 GROUP BY a,b,c,d ORDER BY c,a DESC;

GO

SELECT DISTINCT a,b,c,d, DENSE_RANK() OVER (PARTITION BY b,c ORDER BY d DESC)

FROM dbo.t3 GROUP BY a,b,c,d ORDER BY c,a DESC;

GO

SELECT [table] = N'...' + SUBSTRING(t.[text], CHARINDEX(N'FROM ', t.[text]), 12) + N'...',

s.last_dop, s.last_elapsed_time, s.last_grant_kb, s.max_ideal_grant_kb

FROM sys.dm_exec_query_stats AS s CROSS APPLY sys.dm_exec_sql_text(s.sql_handle) AS t

WHERE t.[text] LIKE N'%dbo.'+N't[1-3]%' ORDER BY t.[text];

So, yes, right-size your columns, please.

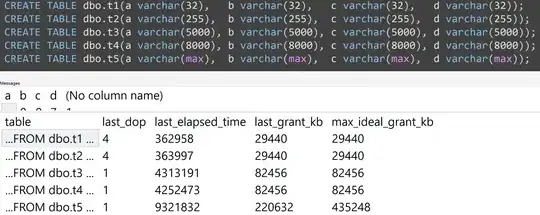

Also, I re-ran the tests with varchar(32), varchar(255), varchar(5000), varchar(8000), and varchar(max). Similar results (click to enlarge), though differences between 32 and 255, and between 5,000 and 8,000, were negligible:

Here's another test with the TOP (5000) change for the more fully reproducible test I was being incessantly badgered about (click to enlarge):

So even with 5,000 rows instead of 10,000 rows (and there are 5,000+ rows in sys.all_columns at least as far back as SQL Server 2008 R2), a relatively linear progression is observed - even with the same data, the larger the defined size of the column, the more memory and time are required to satisfy the exact same query (even if it does have a meaningless DISTINCT).

- 159

- 7

- 180,303

- 28

- 400

- 614

-

this is really surprising. Would the difference between

varchar(450)andvarchar(255)be the same? (Or anything below 4000?) – Jan 24 '17 at 19:26 -

@a_horse_with_no_name I haven't tested all permutations of runtime performance, but the memory grant would be a linear progression - it is simply a function of

rowcount*(column_size/2). – Aaron Bertrand Jan 24 '17 at 19:35 -

That's pretty disappointing then. I though that modern versions of SQL Server don't suffer from that (as long as the defined length is smaller then 8000 or maybe 4000). – Jan 24 '17 at 19:40

-

1@a_horse_with_no_name Well, it has to make a guess at how wide the data is so that it avoids spills. How else should it guess? It can't scan and read the entire table to determine avg/max lengths of all variable width columns as a precursor to generating an execution plan (and even if it could, it would only be able to do that during a recompile). – Aaron Bertrand Jan 24 '17 at 19:50

-

Doesn't SQL Server keep statistics about that? This seems rather strange. At least Oracle and Postgres do not care (for Oracle as long as the limit is below 4000 byte) – Jan 24 '17 at 19:51

-

I ran this on SQL Server Express 2014 and there is indeed a big performance difference even between nvarchar(150) and nvarchar(300). (Can't check

last_dop,last_grant_kbormax_ideal_grant_kbas those columns don't exist there) – Jan 24 '17 at 20:00 -

@a_horse_with_no_name So do Oracle and Postgres just grant all the memory, as if every value was 4000 bytes or whatever the declared size happens to be? I don't know if that's good either. SQL Server doesn't maintain statistics about string lengths except in the case of key size, but then the column would have to be in an index key, and that eliminates any column definition > 900 bytes (though that increases to 1700 in SQL Server 2016). – Aaron Bertrand Jan 24 '17 at 20:01

-

Not sure what you mean with "grant the memory". But it seems this behaviour applies to

nvarcharonly. If I run your script withvarcharcolumns there is no difference between the two tables – Jan 24 '17 at 20:04 -

@a_horse_with_no_name SQL Server uses memory to satisfy a query, and every query is granted memory based on how much data is expected to be handled. – Aaron Bertrand Jan 24 '17 at 20:05

-

@a_horse_with_no_name - Try testing varchar(300) and varchar(600). I suspect that it is a 512 byte size break issue based on past experience with a similar issue, but I'm not anywhere I could test that hypothesis right now. – Laughing Vergil Jan 24 '17 at 20:17

-

2Oracle keeps statistics about e.g. the average row length, the min and max values for each column as well as a histogram. Postgres keeps very similar statistics (it doesn't record min/max though but frequencies). For neither of them there is any difference whatsoever between nvarchar(150), nvarchar(2000) or varchar(400) in performance. – Jan 24 '17 at 20:29

-

This demo is not fully reproducible (why populating tables based on sys.all_columns and not CTE?) but at least when I tried to reproduce it the execution plans were different. – David דודו Markovitz Jan 24 '17 at 20:29

-

@Dudu because the method of populating the sample data used to demonstrate the answer to the question (never mind exactly how many rows are in there) is completely irrelevant. I've changed it to TOP (5000) but it really doesn't matter what you use - the point here was not to have some contest about the fastest way to populate sample data to demonstrate a problem. – Aaron Bertrand Jan 24 '17 at 20:30

-

Your post is very interesting, I also wonder about correct size. I tried to reproduce the results but failed. https://pastebin.com/aB7j5YEn The simple SELECT just is totally random in execution time, so I made it more complicated like you. There is a good correlation between varchar size and memory usage. But none whatsoever (it seems) to execution time. I used SQL Server 2017. – Andreas Reiff May 15 '19 at 13:24

-

1@AndreasReiff Because memory is fast. In isolation you are not going to notice the difference of using slightly more memory for an individual query. But memory is finite. If you have 500 people running the same query, you'll notice the difference - some may run just as fast, but others will get starved out, have to wait for grants, or have to spill. – Aaron Bertrand May 07 '20 at 19:55