Consider the following process:

There are $n$ bins arranged from top to bottom. Initially, each bin contains one ball. In every step, we

- pick a ball $b$ uniformly at random and

- move all the balls from the bin containing $b$ to the bin below it. If that bin is already the lowest one, its balls are removed from the process.

How many steps does it take in expectation until the process terminates, i.e., until all $n$ balls have been removed from the process? Has this been studied before? Does the answer follow easily from known techniques?

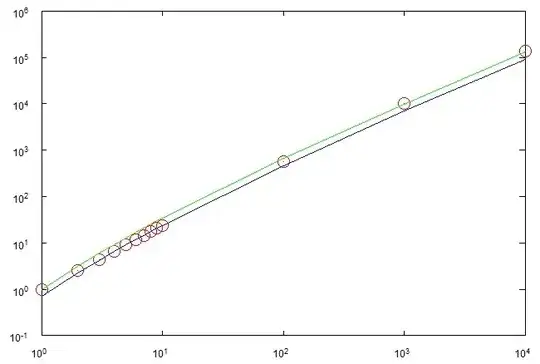

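In the best case, the process finishes after $n$ steps (always pick a ball from the topmost nonempty bin, so that one growing pile travels all the way down). In the worst case it takes $\Theta(n^2)$ steps (always pick a ball from the lowest nonempty bin, so that every step moves a single ball, for $1 + 2 + \dots + n = n(n+1)/2$ steps in total). Both cases should be very unlikely, though. My conjecture is that the expected number of steps is $\Theta(n\log n)$, and I did some experiments which seem to confirm this.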
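For anyone who wants to run similar experiments, here is a minimal simulation sketch of the process as described above. It is not the code behind the experiments mentioned in the question; the names `simulate` and `average_steps` and the choice of a seeded `random.Random` are assumptions made for illustration. Picking a ball uniformly at random is implemented as picking a bin with probability proportional to the number of balls it currently holds.

```python
import random


def simulate(n, rng):
    """Run the process once; return the number of steps until all balls are gone."""
    counts = [1] * n      # counts[i] = balls in bin i; index 0 is the top bin
    remaining = n         # balls still in the process
    steps = 0
    while remaining > 0:
        # Choose a ball uniformly at random among the remaining ones and
        # locate its bin by walking through the per-bin counts.
        r = rng.randrange(remaining)
        i = 0
        while r >= counts[i]:
            r -= counts[i]
            i += 1
        moved = counts[i]
        counts[i] = 0
        if i + 1 < n:
            counts[i + 1] += moved   # move the whole pile one bin down
        else:
            remaining -= moved       # lowest bin: the pile leaves the process
        steps += 1
    return steps


def average_steps(n, trials, seed=0):
    """Empirical mean number of steps over `trials` independent runs (hypothetical helper)."""
    rng = random.Random(seed)
    return sum(simulate(n, rng) for _ in range(trials)) / trials
```

Comparing `average_steps(n, trials)` with `n * math.log(n)` for a growing sequence of $n$ is one way to probe the conjecture empirically. The linear scan makes each step cost $O(n)$, which is fine for moderate $n$; a Fenwick tree over the counts would be a natural replacement for larger instances.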

(Note that picking a bin uniformly at random is a very different process that will obviously take $\Theta(n^2)$ steps to finish.)