I wrote a 2D fluid solver in OpenGL (code here) some time ago.

While it runs flawlessly on my onboard Intel GPU,

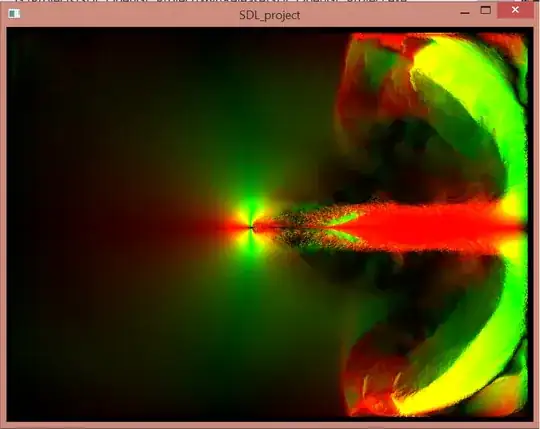

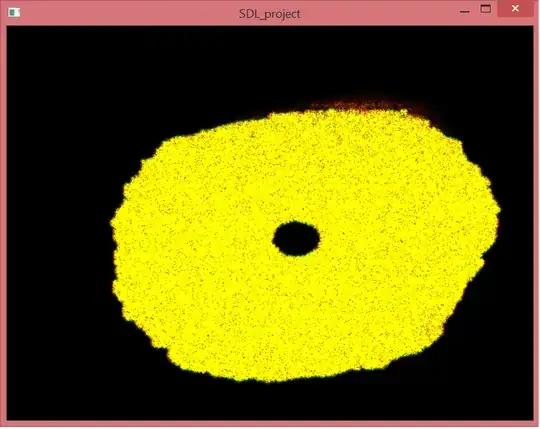

the simulation very quickly "blows-up" when same code is run using nvidia card :

In the second picture, fluid is being "added" to the system and is "diffusing away" too, but unlike in the first picture there is no advection.

I would like to know what might cause this. Could it be that different vendors interpret the standard differently?

PS: The red and green colors represent the magnitude of the vector field in the x and y directions, respectively.