Suppose we already have UV coordinates assigned to the mesh vertices. How is texture baking implemented?

I guess it will be something like this:

```
for each coordinate (u, v) in parameter space:
    (x, y, z) = inverse(u, v)          # Get the geometric-space coordinate.
    f = faces(x, y, z)                 # Get the corresponding face; we may need its normal for rendering.
    pixels[u, v] = render(x, y, z, f)
```
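
A common way to organize this loop is to turn it inside out: iterate over faces instead of texels, rasterize each face's UV triangle onto the texture grid, and interpolate position (and, the same way, normals) with barycentric weights at every texel center the triangle covers. The face is then known by construction. This is a minimal sketch, assuming a triangulated mesh with per-vertex UVs in [0, 1]. `bake`, `edge` and the `shade` callback (standing in for `render` above) are hypothetical names, and the result is a dict keyed by texel rather than a real image.

```python
import math


def edge(a, b, p):
    """Twice the signed area of triangle (a, b, p)."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def bake(uvs, positions, faces, width, height, shade):
    """Rasterize every face in UV space and shade each covered texel center.

    uvs:       per-vertex (u, v), assumed in [0, 1]; rows follow v here
               (flip y if your image origin is top-left)
    positions: per-vertex (x, y, z)
    faces:     triangles as vertex-index triples
    shade:     hypothetical callback shade(position, face_index) -> value
    Returns {(x, y): value} for every texel whose center some face covers.
    """
    pixels = {}
    for f, (i0, i1, i2) in enumerate(faces):
        # Corners of the UV triangle, in texel units.
        a, b, c = [(uvs[i][0] * width, uvs[i][1] * height) for i in (i0, i1, i2)]
        area = edge(a, b, c)
        if area == 0:
            continue  # zero area in UV space: covers no texels
        # Visit only texels inside the triangle's bounding box.
        x0 = max(0, math.floor(min(a[0], b[0], c[0])))
        x1 = min(width - 1, math.ceil(max(a[0], b[0], c[0])))
        y0 = max(0, math.floor(min(a[1], b[1], c[1])))
        y1 = min(height - 1, math.ceil(max(a[1], b[1], c[1])))
        for y in range(y0, y1 + 1):
            for x in range(x0, x1 + 1):
                p = (x + 0.5, y + 0.5)  # texel center
                # Edge functions divided by the signed area are the
                # barycentric weights of a, b and c (either winding order).
                wa = edge(b, c, p) / area
                wb = edge(c, a, p) / area
                wc = edge(a, b, p) / area
                if wa < 0 or wb < 0 or wc < 0:
                    continue  # texel center lies outside this face
                pos = tuple(
                    wa * positions[i0][k] + wb * positions[i1][k] + wc * positions[i2][k]
                    for k in range(3)
                )
                # Texels on an edge shared by two faces are written twice;
                # the later face wins.
                pixels[(x, y)] = shade(pos, f)
    return pixels


# One UV triangle covering the lower-left half of a 4x4 texture.
pixels = bake([(0, 0), (1, 0), (0, 1)], [(0, 0, 0), (2, 0, 0), (0, 0, 3)],
              [(0, 1, 2)], 4, 4, lambda pos, f: pos)
# 10 texels are covered; pixels[(0, 0)] == (0.25, 0.0, 0.375)
```

Texels that fall outside every UV island are never written, which is one reason bakers commonly add a margin around islands afterwards.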

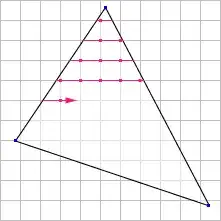

What is the inverse function? Is it a projective transformation or a bilinear transformation?
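
Assuming a triangulated mesh whose UVs are interpolated linearly across each face (the usual convention), the map from (u, v) back to (x, y, z) is affine within each triangle: compute the barycentric weights of (u, v) in the face's UV triangle and apply the same weights to the face's 3D vertices. A bilinear inverse only comes up if faces are quads parameterized bilinearly. This is a minimal sketch; `barycentric` and `uv_to_xyz` are hypothetical names.

```python
def barycentric(p, a, b, c):
    """Barycentric weights (wa, wb, wc) of 2D point p in triangle (a, b, c)."""
    # Solve p - a = wb * (b - a) + wc * (c - a) with Cramer's rule.
    e1x, e1y = b[0] - a[0], b[1] - a[1]
    e2x, e2y = c[0] - a[0], c[1] - a[1]
    px, py = p[0] - a[0], p[1] - a[1]
    det = e1x * e2y - e2x * e1y
    if det == 0:
        raise ValueError("degenerate UV triangle")
    wb = (px * e2y - e2x * py) / det
    wc = (e1x * py - px * e1y) / det
    return 1.0 - wb - wc, wb, wc


def uv_to_xyz(uv, tri_uv, tri_xyz):
    """Map uv inside one face's UV triangle to the matching 3D point.

    The same weights can interpolate per-vertex normals for shading
    (renormalize the result afterwards).
    """
    wa, wb, wc = barycentric(uv, *tri_uv)
    a, b, c = tri_xyz
    return tuple(wa * a[k] + wb * b[k] + wc * c[k] for k in range(3))


# Example: UV triangle (0,0)-(1,0)-(0,1) mapped onto a triangle in 3D.
print(uv_to_xyz((0.5, 0.25), [(0, 0), (1, 0), (0, 1)], [(0, 0, 0), (2, 0, 0), (0, 0, 3)]))
# prints (1.0, 0.0, 0.75)
```

Because the weights are affine functions of (u, v), evenly spaced texels map to evenly spaced points on each 3D triangle; there is no perspective division involved in UV space.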

And how can I efficiently find the face that contains a given coordinate (u, v)?
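
If the texel-first loop is kept, finding the face for each (u, v) is a point-location query in UV space, and testing every face per texel costs O(faces) per texel. One simple acceleration structure (a BVH or quadtree would also work) is a uniform grid over the UV square in which each cell lists the faces whose UV bounding box overlaps it. This is a minimal sketch; `build_uv_grid`, `find_face`, `cross` and `cell_index` are hypothetical names, and the grid resolution `n` is a tuning assumption.

```python
from collections import defaultdict


def cross(o, a, p):
    """Signed area test: > 0 if p is left of the directed line o -> a."""
    return (a[0] - o[0]) * (p[1] - o[1]) - (a[1] - o[1]) * (p[0] - o[0])


def cell_index(t, n):
    """Grid cell along one axis for coordinate t, clamped to [0, n - 1]."""
    return max(0, min(n - 1, int(t * n)))


def build_uv_grid(uvs, faces, n):
    """Bucket each face into every cell of an n x n grid over [0, 1]^2
    that its UV bounding box overlaps."""
    grid = defaultdict(list)
    for f, tri in enumerate(faces):
        us = [uvs[i][0] for i in tri]
        vs = [uvs[i][1] for i in tri]
        for cx in range(cell_index(min(us), n), cell_index(max(us), n) + 1):
            for cy in range(cell_index(min(vs), n), cell_index(max(vs), n) + 1):
                grid[(cx, cy)].append(f)
    return grid


def find_face(uv, uvs, faces, grid, n):
    """Index of a face whose UV triangle contains uv, or None (e.g. a UV gap)."""
    key = (cell_index(uv[0], n), cell_index(uv[1], n))
    for f in grid.get(key, ()):
        a, b, c = (uvs[i] for i in faces[f])
        d1, d2, d3 = cross(a, b, uv), cross(b, c, uv), cross(c, a, uv)
        # Inside (or on an edge) iff the three signs do not disagree.
        has_neg = d1 < 0 or d2 < 0 or d3 < 0
        has_pos = d1 > 0 or d2 > 0 or d3 > 0
        if not (has_neg and has_pos):
            return f
    return None


uvs = [(0, 0), (1, 0), (1, 1), (0, 1)]
faces = [(0, 1, 2), (0, 2, 3)]  # two triangles splitting the unit square
grid = build_uv_grid(uvs, faces, 4)
print(find_face((0.75, 0.25), uvs, faces, grid, 4))  # prints 0
print(find_face((0.25, 0.75), uvs, faces, grid, 4))  # prints 1
```

If UV islands overlap, this returns the first matching face. Alternatively, iterating over faces and rasterizing each UV triangle avoids the lookup entirely, because every texel it covers already knows its face.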