I'm generating images in Python and UV-mapping them to a sphere, and I hope to automate the process, possibly even with a new image each frame coordinated with a keyframed sphere shape. UV-mapping was quite easy when I did it here with a NASA image, but when I tried again with PNG images saved using `plt.imsave('img2', img2)`, Blender seems to want to put the "poles" somewhere on the equator.

Update: The NASA images don't work now either. I recently moved to a new computer environment (clean install) and installed the most recent Blender.

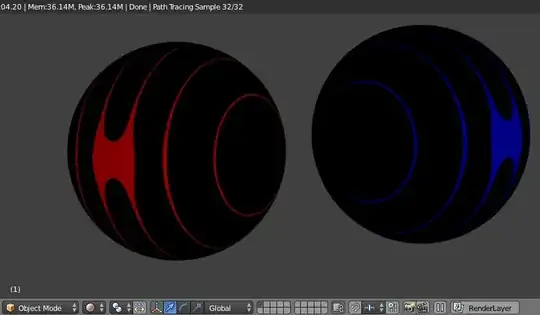

Below, the images are shown a few ways to confirm that I have tried both the "tall" and "wide" orientations. The NumPy arrays generating the images have shapes [1201, 2401, 4] and [2401, 1201, 4], where the last axis holds R, G, B, A. The pattern is calculated so that the lines have constant thickness from "equator" to "pole" after UV-mapping to a sphere.
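For reference, here is a minimal sketch of how arrays with these shapes could be produced. The actual pattern is not given in the post, so everything here is an assumption for illustration: the function name `meridian_stripes`, the choice of lines of longitude as the pattern, the number of lines, and the line width. The stripes are widened by 1/cos(latitude) in the image so that they would keep a constant width on the sphere.

```python
import numpy as np


def meridian_stripes(n_lat=1201, n_lon=2401, n_lines=12, half_width_deg=1.0):
    """Return an RGBA float array of shape (n_lat, n_lon, 4) with values in [0, 1].

    Hypothetical pattern: black meridian lines on a white background.
    """
    lat = np.linspace(90.0, -90.0, n_lat)[:, None]    # rows: north pole at the top
    lon = np.linspace(-180.0, 180.0, n_lon)[None, :]  # columns: longitude
    spacing = 360.0 / n_lines

    # Distance in degrees of longitude to the nearest stripe centre.
    dlon = np.abs((lon + spacing / 2) % spacing - spacing / 2)

    # A fixed width on the sphere covers more degrees of longitude near the
    # poles, so compare the longitude offset scaled by cos(latitude).
    cos_lat = np.maximum(np.cos(np.radians(lat)), 1e-6)
    on_line = dlon * cos_lat <= half_width_deg

    img = np.ones((n_lat, n_lon, 4))
    img[on_line, :3] = 0.0  # black lines; alpha stays 1
    return img


def save_png(path, img):
    """Write the array with matplotlib, as in the post."""
    import matplotlib.pyplot as plt  # imported lazily; only needed for saving

    plt.imsave(path, img)


wide = meridian_stripes()             # shape (1201, 2401, 4)
tall = np.transpose(wide, (1, 0, 2))  # shape (2401, 1201, 4), assumed to be a plain transpose
```

Calling `save_png("img2.png", wide)` would then write the wide version to disk. The file name is only an example.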

I used the GUI to make the first sphere and set its parameters, connected the material nodes, then copied and pasted a second sphere and changed its image file. I haven't rotated the spheres at all.

While I can half-fix this by adding a Mapping node as @Gandolf3 shows, I really want the direction of the lines to map reliably to the top and bottom of the UV-sphere mesh object, that is, the places where all the edge loops meet.

Is there some way I can get Blender (here) to always UV-map the long edges of the image, or at least the top/bottom edges, reliably to the poles of the UV sphere? If I do this a lot I may try to automate it, and the images may differ slightly in size/shape, so I'd like to get a handle on this!
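As a starting point for automating this, the GUI setup described above could be scripted roughly as follows. This is only a sketch under stated assumptions and is not claimed to fix the pole placement: the helper names `as_wide` and `textured_uv_sphere` are hypothetical, the segment and ring counts are arbitrary, and the script assumes the new material contains a default Principled BSDF node. It wires the Texture Coordinate node's UV output explicitly into the Image Texture node so that the sphere's own UV map is the one being sampled.

```python
import numpy as np


def as_wide(img):
    """Return img with its longer edge horizontal.

    Assumption: a "tall" array is simply the transpose of the "wide" layout.
    """
    h, w = img.shape[:2]
    return img if w >= h else np.transpose(img, (1, 0, 2))


def textured_uv_sphere(image_path, name="sphere"):
    """Add a UV sphere whose material samples image_path through its UV map."""
    import bpy  # only available inside Blender's bundled Python

    bpy.ops.mesh.primitive_uv_sphere_add(segments=64, ring_count=32, radius=1.0)
    obj = bpy.context.active_object
    obj.name = name

    mat = bpy.data.materials.new(name + "_mat")
    mat.use_nodes = True
    nodes, links = mat.node_tree.nodes, mat.node_tree.links

    coord = nodes.new("ShaderNodeTexCoord")
    tex = nodes.new("ShaderNodeTexImage")
    tex.image = bpy.data.images.load(image_path)
    # Look the shader up by type rather than by its (translatable) name.
    bsdf = next(n for n in nodes if n.type == "BSDF_PRINCIPLED")

    links.new(coord.outputs["UV"], tex.inputs["Vector"])
    links.new(tex.outputs["Color"], bsdf.inputs["Base Color"])

    obj.data.materials.append(mat)
    return obj
```

Inside Blender, `textured_uv_sphere("/path/to/img2.png")` would create one sphere per image (the path is a placeholder). `as_wide` can be applied to each array before saving so that images of slightly different size all reach Blender with the long edge horizontal.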

Here are img2.png and img3.png to play with!

Nodes:

uv-spheres

Rendered:

the two images

This doesn't help! (all permutations of 90° rotations about x, y, and z)