I think the choice of vocabulary size in LLMs involves two trade-offs:

- The bigger your tokens are, the less frequently each one occurs, so the model sees fewer training examples per token.

- The more tokens you have, the more parameters you dedicate to the input embeddings and the output layer.
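To make the second trade-off concrete, here is a rough sketch of how the embedding parameter count scales with vocabulary size. It assumes one `vocab_size × d_model` matrix for the input embedding and another for the output projection (or a single shared matrix when the weights are tied), with no biases. The function names, the 125M parameter budget, and `d_model = 768` are illustrative assumptions, not values taken from any particular model.

```python
def embedding_params(vocab_size: int, d_model: int, tied: bool = False) -> int:
    """Parameters in the input embedding and output projection (no biases).

    Assumes each matrix has shape (vocab_size, d_model); with tied weights
    the two share a single matrix.
    """
    per_matrix = vocab_size * d_model
    return per_matrix if tied else 2 * per_matrix


def body_budget(total_params: int, vocab_size: int, d_model: int,
                tied: bool = False) -> int:
    """Parameters left for the rest of the model under a fixed total budget."""
    return total_params - embedding_params(vocab_size, d_model, tied)


if __name__ == "__main__":
    # Hypothetical budget and width, chosen only for illustration.
    total, d_model = 125_000_000, 768
    for vocab_size in (8_000, 32_000, 64_000):
        emb = embedding_params(vocab_size, d_model)
        rest = body_budget(total, vocab_size, d_model)
        print(f"vocab={vocab_size:>6}  embeddings={emb:>11,}  "
              f"share={emb / total:.1%}  remaining={rest:,}")
```

With a fixed total budget, every extra token takes parameters away from the rest of the model, so the effect is largest for small models and shrinks as the total parameter count grows.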

I'm looking for a chart showing the effect of tokenizer vocabulary size on some quality metric. It could be a chart with a fixed total number of parameters, so that both trade-offs affect quality. Or it could be a chart where the architecture is fixed and the number of parameters grows with the vocabulary size, so that only the first trade-off is shown.

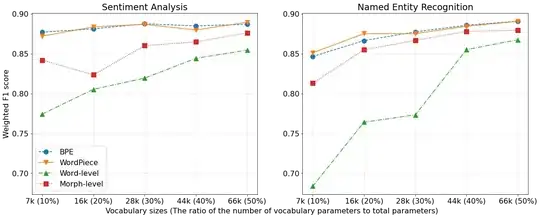

The best I could find is this chart from *Impact of Tokenization on Language Models: An Analysis for Turkish*. But it only shows quality increasing. Surely at some point increasing the vocabulary starts to have a negative effect on quality?