As this question says:

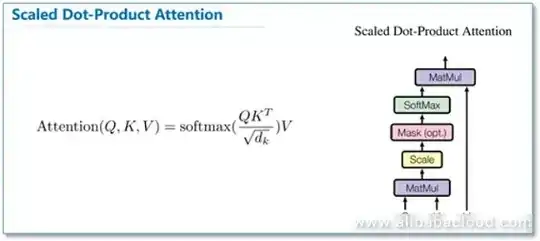

In scaled dot product attention, we scale our outputs by dividing the dot product by the square root of the dimensionality of the matrix:

The reason why is stated that this constrains the distribution of the weights of the output to have a standard deviation of 1.

My question is why don't we do the same after multiplying to $V$(values) for the same reason?