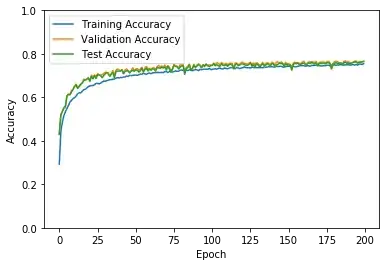

Is this due to my dropout layers being disabled during evaluation?

I'm classifying the CIFAR-10 dataset with a CNN using the Keras library.

There are 50000 samples in the training set; I'm using a 20% validation split of the training data (10000 validation : 40000 training). I have 10000 instances in the test set.
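
To make the dropout question concrete, here is a minimal pure-Python sketch of inverted dropout, the scheme Keras's `Dropout` layer follows: during training each unit is zeroed with probability `rate` and the survivors are scaled by `1 / (1 - rate)`, while during evaluation the layer passes its input through unchanged. The `dropout` helper and its arguments are hypothetical names for illustration, not the Keras implementation.

```python
import random


def dropout(activations, rate, training, rng):
    """Illustrative inverted dropout on a list of floats (not Keras code)."""
    if not training or rate == 0.0:
        # Evaluation mode: the layer is an identity function.
        return list(activations)
    keep = 1.0 - rate
    # Training mode: drop each unit with probability `rate`,
    # scale the kept units so the expected activation is unchanged.
    return [a / keep if rng.random() >= rate else 0.0 for a in activations]


rng = random.Random(0)  # fixed seed, only so the sketch is repeatable
x = [1.0] * 10

train_out = dropout(x, rate=0.5, training=True, rng=rng)   # noisy: values are 0.0 or 2.0
eval_out = dropout(x, rate=0.5, training=False, rng=rng)   # identical to x

print("training  :", train_out)
print("evaluation:", eval_out)
```

Because the training accuracy Keras reports is computed with this noise switched on (and averaged over the batches of an epoch), while validation and test accuracy are computed with it switched off, a small gap in favour of validation and test is plausible for a model with dropout.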

model.summary()) the loss function and which accuracy metric are you using. The validation and test accuracies are only slightly greater than the training accuracy. This can happen (e.g. because the validation or test examples come from a distribution on which the model actually performs better), although it is unusual. How many examples do you use for validation and testing? – nbro Mar 04 '20 at 14:06